Today I built a Python script that takes a CSV file with a list of email addresses and, using the Gmail API, moves every inbox email from each sender to its matching folder (a Gmail label) and archives it.

Now obviously you can do this in Gmail with an automated filter for incoming emails. The reason I’ve done it this way is that occasionally, probably once every other week, I go through all the emails in my inbox and move them by hand (99% of the time to the same folders).

I wanted to automate this cleanup without using SaneBox. I did try to use Make.com to do the same thing, but this was honestly simpler, and I can run it without the additional operations cost.

Here’s the CSV format, with the headers and an example row. I couldn’t get it to match folders by name, so the label column holds the Gmail label ID and the script uses that to find the folder:

email, label

example_email@yahoo.com, Label_55
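If you would rather type folder names than IDs in the CSV, one possible approach is to build a lookup from the label list first. This is a minimal sketch, not part of the original script: `build_label_index` and `resolve_label` are hypothetical helper names, and the sample data is made up. It assumes each label is a dict with `name` and `id` keys, which is what the script's own label listing prints. Nested Gmail labels appear to use names like `Parent/Child`, so the full path would need to go in the CSV.

```python
def build_label_index(labels):
    """Map lowercased label names to label IDs."""
    return {label['name'].lower(): label['id'] for label in labels}


def resolve_label(value, index):
    """Return a label ID for a CSV value that is either a name or already an ID."""
    value = value.strip()
    if value in index.values():
        return value  # already a label ID such as Label_55
    try:
        return index[value.lower()]
    except KeyError:
        raise ValueError(f'Unknown label: {value!r}') from None


# Made-up sample shaped like the 'labels' list the Gmail API returns.
# In the real script this would come from:
#   service.users().labels().list(userId='me').execute().get('labels', [])
sample_labels = [
    {'id': 'INBOX', 'name': 'INBOX'},
    {'id': 'Label_55', 'name': 'Receipts'},
]
index = build_label_index(sample_labels)

print(resolve_label('receipts', index))  # Label_55
print(resolve_label('Label_55', index))  # Label_55
```

Matching is case-insensitive here on purpose, since a typo in capitalization is the most likely way a name lookup fails.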

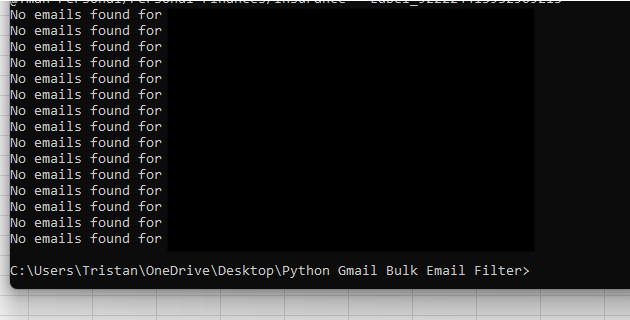

This is what the output looks like:

Here’s my code if anyone wants to make their own Python script:

import os
import pickle

import pandas as pd
from google.auth.transport.requests import Request
from google_auth_oauthlib.flow import InstalledAppFlow
from googleapiclient.discovery import build
from googleapiclient.errors import HttpError

# Step 1: Authentication and Service Initialization
def authenticate_gmail_api():
    SCOPES = ['https://www.googleapis.com/auth/gmail.modify']
    creds = None
    if os.path.exists('token.pickle'):
        with open('token.pickle', 'rb') as token:
            creds = pickle.load(token)
    if not creds or not creds.valid:
        if creds and creds.expired and creds.refresh_token:
            creds.refresh(Request())
        else:
            flow = InstalledAppFlow.from_client_secrets_file(
                'credentials.json', SCOPES)
            creds = flow.run_local_server(port=0)
        with open('token.pickle', 'wb') as token:
            pickle.dump(creds, token)
    service = build('gmail', 'v1', credentials=creds)
    return service

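# Step 2: Read Filters from CSV File
def read_filters_from_csv(csv_file):
    # skipinitialspace drops the space after each comma, so a header written
    # as "email, label" still yields the columns 'email' and 'label'
    filters_df = pd.read_csv(csv_file, skipinitialspace=True)
    return filters_df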

# Step 3: Search for Matching Emails
def search_emails(service, query):
    try:
        # Modify the query to explicitly search only within the INBOX
        query = f'label:inbox {query}'
        # Note: this returns only the first page of results; senders with
        # more matches than one page holds need another run to be fully cleared
        results = service.users().messages().list(userId='me', q=query).execute()
        messages = results.get('messages', [])
        return messages
    except HttpError as error:
        print(f'An error occurred: {error}')
        return []

# Step 4: Apply Filters and Move Emails
def apply_filters(service, filters_df):
    for index, row in filters_df.iterrows():
        email_filter = row['email']
        label = row['label']
        query = f'from:{email_filter}'
        messages = search_emails(service, query)
        if not messages:
            print(f'No emails found for {email_filter}')
            continue
        for message in messages:
            msg_id = message['id']
            # Move email to the specified folder (label)
            service.users().messages().modify(
                userId='me',
                id=msg_id,
                body={'addLabelIds': [label], 'removeLabelIds': ['INBOX']}
            ).execute()
            print(f'Email from {email_filter} moved to {label} and archived.')

# Step 5: Retrieve and List Label IDs
def list_labels(service):
    results = service.users().labels().list(userId='me').execute()
    labels = results.get('labels', [])
    if not labels:
        print('No labels found.')
    else:
        print('Labels:')
        for label in labels:
            print(f"{label['name']} - {label['id']}")

# Step 6: Main Function
def main():
    # Authenticate and create the Gmail API service
    service = authenticate_gmail_api()

    # List all labels and their IDs (needed for the CSV);
    # comment this line out once you have the IDs
    list_labels(service)

    # Load filter rules from CSV
    csv_file = 'filters.csv'  # Replace with your CSV file
    filters_df = read_filters_from_csv(csv_file)

    # Apply filters to emails
    apply_filters(service, filters_df)


if __name__ == '__main__':
    main()
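One thing to be aware of: the search step only looks at a single page of results, and each email is modified with its own API call. If your inbox has built up a lot of mail from one sender, a variation like the one below might help. It is a sketch under assumptions, not the script's actual behavior: `list_all_message_ids`, `chunked` and `move_and_archive` are hypothetical names, it follows the `nextPageToken` field in the list response, and it uses the Gmail API's `batchModify` method, which as far as I know accepts up to 1000 message IDs per request.

```python
def list_all_message_ids(service, query):
    """Collect IDs of every inbox message matching query, page by page."""
    ids = []
    page_token = None
    while True:
        kwargs = {'userId': 'me', 'q': f'label:inbox {query}'}
        if page_token:
            kwargs['pageToken'] = page_token
        response = service.users().messages().list(**kwargs).execute()
        ids.extend(m['id'] for m in response.get('messages', []))
        # The response carries nextPageToken only while more pages remain
        page_token = response.get('nextPageToken')
        if not page_token:
            return ids


def chunked(items, size):
    """Yield consecutive slices of items, each at most size long."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def move_and_archive(service, msg_ids, label_id, chunk_size=1000):
    """Add label_id and remove INBOX for many messages with few requests."""
    for chunk in chunked(msg_ids, chunk_size):
        service.users().messages().batchModify(
            userId='me',
            body={
                'ids': chunk,
                'addLabelIds': [label_id],
                'removeLabelIds': ['INBOX'],
            },
        ).execute()
```

Inside the per-row loop this would be used roughly as `move_and_archive(service, list_all_message_ids(service, f'from:{email_filter}'), label)`. The trade-off is that you lose the per-email progress line, since each batch call covers many messages at once.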